Getting artificial intelligence to solve today’s hardest problems is no easy feat, especially for social media companies. Facebook came under the spotlight after the Christchurch shootings in March because it was unable to stop the shooter’s live-streamed video from spreading across its platform quickly enough.

Facebook CEO Mark Zuckerberg told audiences at the F8 conference that the company would tackle violent videos with automation and AI, but one Facebook executive now says that will take years, according to Bloomberg.

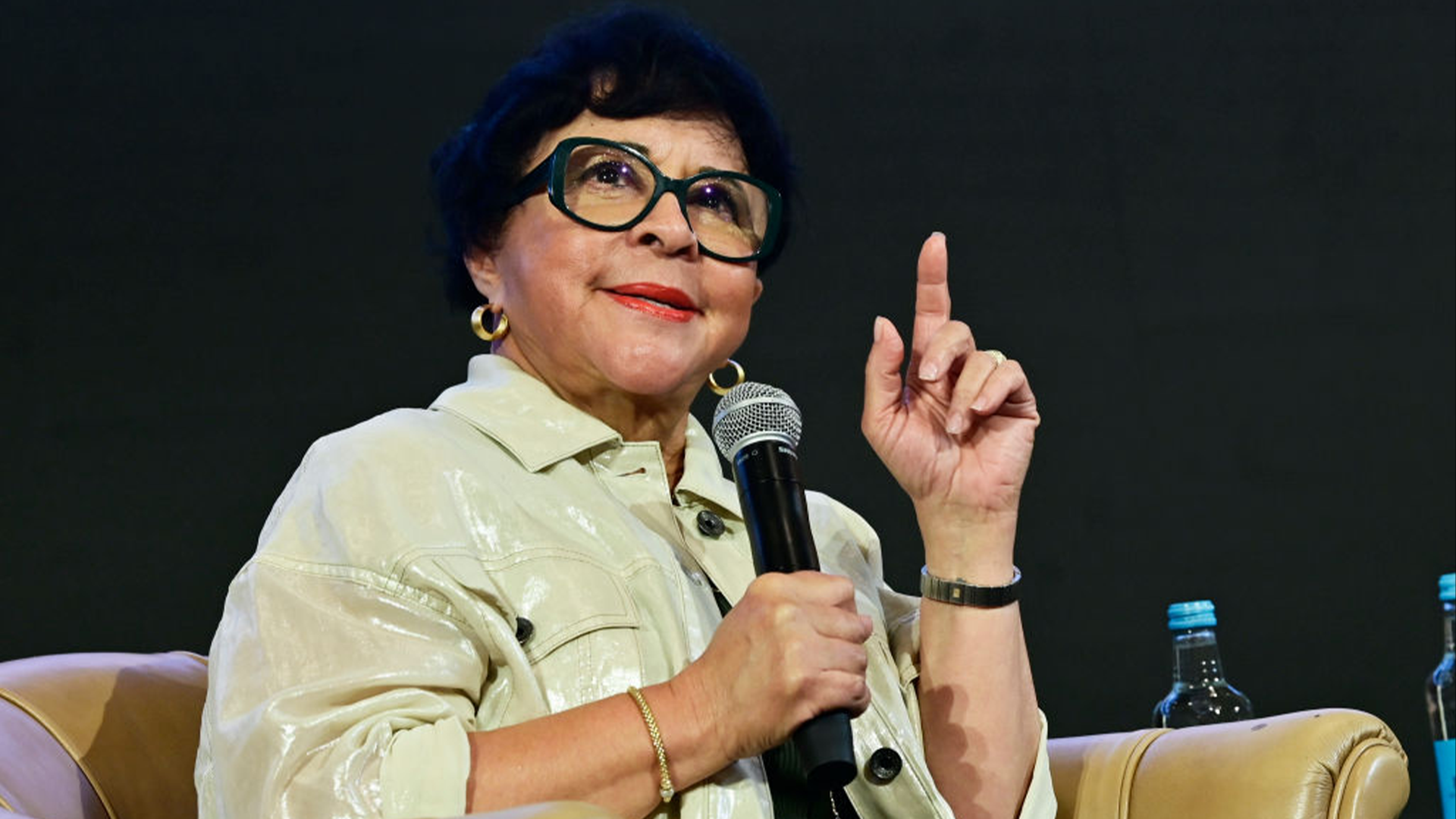

“This problem is very far from being solved,” Facebook’s chief AI scientist Yann LeCun said during a talk at Facebook’s AI Research Lab in Paris.

LeCun added that there is not enough data to train automated systems to detect violent live streams because the company “thankfully” doesn’t have many examples of people shooting others.

That timeline could worry users, since Facebook Live could be weaponized again in the meantime. Since the Christchurch shooting, the company has invested in better technologies to detect violent videos and is “exploring restrictions” on Facebook Live for users who have previously violated its Community Standards.

Facebook also used an experimental audio technology to catch copies of the video that its AI missed. Facebook said in a blog post that it “employed audio matching technology to detect videos which had visually changed beyond our systems’ ability to recognize automatically but which had the same soundtrack.”
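
Facebook has not described how its audio matching works, so the following is only a toy sketch of the general idea: reduce a soundtrack to a compact fingerprint and compare fingerprints rather than pixels. Everything here is an assumption made for illustration, including the function names `fingerprint`, `similarity`, and `synth`, the frame size, and the choice of an energy-based fingerprint. It is not Facebook's method.

```python
import math

def fingerprint(samples, frame_size=256):
    """Toy fingerprint (illustrative assumption, not Facebook's algorithm).

    Splits the audio into fixed-size frames and records, for each pair of
    consecutive frames, whether the energy went up (1) or not (0).
    """
    energies = [
        sum(s * s for s in samples[i:i + frame_size])
        for i in range(0, len(samples) - frame_size + 1, frame_size)
    ]
    return [1 if later > earlier else 0
            for earlier, later in zip(energies, energies[1:])]

def similarity(fp_a, fp_b):
    """Fraction of matching bits over the shorter fingerprint's length."""
    n = min(len(fp_a), len(fp_b))
    if n == 0:
        return 0.0
    return sum(1 for a, b in zip(fp_a, fp_b) if a == b) / n

def synth(amplitudes, frame_size=256, freq=440.0, rate=8000):
    """Hypothetical test signal: a tone whose loudness changes every frame."""
    samples = []
    for frame_index, amp in enumerate(amplitudes):
        for k in range(frame_size):
            n = frame_index * frame_size + k
            samples.append(amp * math.sin(2 * math.pi * freq * n / rate))
    return samples

# The "original" soundtrack, a quieter re-upload of it, and unrelated audio.
original = synth([0.2, 0.8, 0.5, 0.9, 0.1, 0.6, 0.3, 0.7])
quieter_copy = [0.5 * s for s in original]
unrelated = synth([0.9, 0.7, 0.5, 0.3, 0.2, 0.4, 0.6, 0.8])

print(similarity(fingerprint(original), fingerprint(quieter_copy)))
print(similarity(fingerprint(original), fingerprint(unrelated)))
```

Because the fingerprint depends only on how loudness rises and falls over time, a copy whose picture has been cropped, filtered, or re-recorded would still match as long as its soundtrack is intact. Production systems typically rely on far more robust spectral features than this sketch does.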

As the company looks for more ways to combat violence on its platforms, it will be forced to race against the clock.