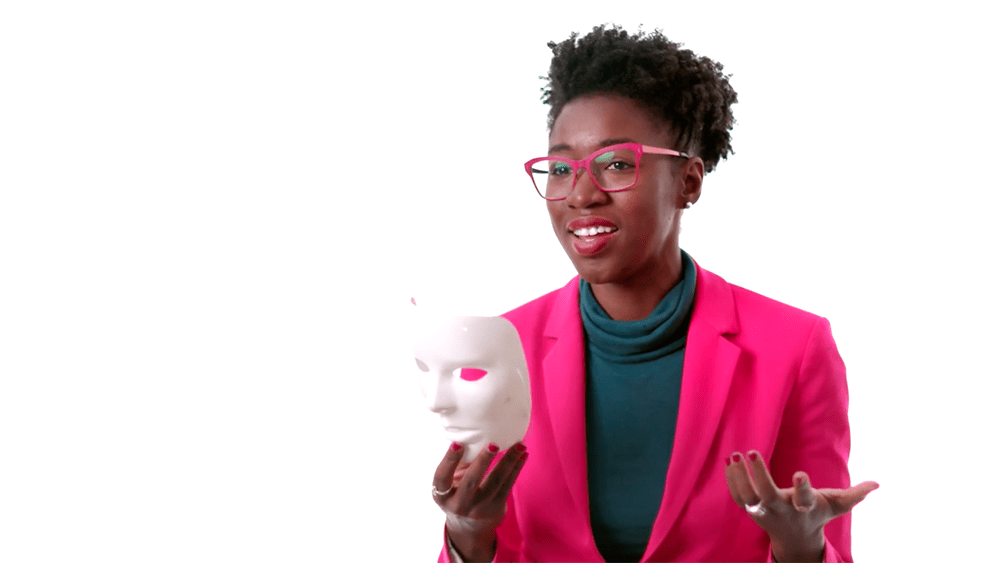

When I was a master’s student at MIT, I worked on a number of different art projects that used facial analysis technology. I soon realized that the software I was using had a hard time detecting my face. But after I made one adjustment, the software no longer struggled. That adjustment? I put on a white mask.

Why does this matter?

The social implications of this are rooted in the longstanding marginalization of Black women, a disregard for not only our gender identity, but our very humanity. And the civic implications can be life-threatening.

For example, when it comes to interactions with law enforcement, flawed facial analysis systems pose serious risks for people of color. Innocent people can be misidentified as criminals. This is not hypothetical. The Face-Off report, released by Big Brother Watch UK, highlights false positive match rates of over 90 percent for facial recognition technology deployed by the Metropolitan Police. And as recently as this summer, the ACLU found that Amazon's facial recognition technology erroneously labeled 28 members of Congress as criminals.

As AI technology continues to evolve, tech companies have a responsibility to ensure that their products are used to strengthen communities, not deepen racial inequities.

And we have a responsibility to hold these companies accountable. We’re not having enough conversations today about how technology is designed, developed and deployed, or about its dangers and how it can deepen existing inequalities.

Let’s change that. Our voices together can create a world where technology works well for all of us, not just some of us.

Watch Joy Buolamwini's video above or at the following link: https://www.youtube.com/watch?v=PaJlS1bMt8k